In this notebook I am going to re-implement YOLOV2 as described in the paper

YOLO9000: Better, Faster, Stronger . The goal is to replicate the model as described in the paper and train it on the

VOC 2012 dataset.

Introduction Most of the code, in this notbook comes from a series of blog posts by Yumi. I just followed his posts to get things working. The original blog post uses Tensorflow 1.x so I had to change a few things to make it work but most of the code remains the same. I am linking all his blog posts here, and I highly recommend taking a look at it as it explains everything in much more detail.

Yumi’s Blog Posts with explanation Google colab with end to end training and evaluation on VOC 2012 I followed Yumi’s blogs to replicate YOLOV2 for VOC 2012 dataset. If you are looking for a consolidated python notebook with everything working, you can clone this Google Colab notebook.

https://colab.research.google.com/drive/14mPj3NYg_lJwWCRclzgPzdpKXoQutxUb?usp=sharing

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

import tensorflow as tf

import matplotlib.pyplot as plt # for plotting the images

%matplotlib inline

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

from google.colab import drive

drive.mount('/content/gdrive')

Drive already mounted at /content/gdrive; to attempt to forcibly remount, call drive.mount("/content/gdrive" , force_remount=True).

Data Preprocessing I would be using

VOC 2012 dataset as its size is manageable so it would be easy to run it using Google Colab.

First, I download and extract the dataset.

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

!wget http://host.robots.ox.ac.uk/pascal/VOC/voc2012/VOCtrainval_11-May-2012.tar

--2020-07-06 20 :57:53-- http://host.robots.ox.ac.uk/pascal/VOC/voc2012/VOCtrainval_11-May-2012.tar

Resolving host.robots.ox.ac.uk (host.robots.ox.ac.uk)... 129.67 .94 .152

Connecting to host.robots.ox.ac.uk (host.robots.ox.ac.uk)|129.67.94.152|:80... connected.

HTTP request sent, awaiting response... 200 OK

Length: 1999639040 (1.9G) [application/x-tar]

Saving to: ‘VOCtrainval_11-May-2012.tar.1’

VOCtrainval_11-May- 100 %[===================>] 1. 86G 9. 38MB/s in 3m 35s

2020 -07 -06 21 :01:28 (8.88 MB/s) - ‘VOCtrainval_11-May-2012.tar.1’ saved [1999639040/1999639040]

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

!tar xvf VOCtrainval_11-May-2012.tar

Next, we define a function that parses the annotations from the XML files and stores it in an array.

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

import os

import xml.etree.ElementTree as ET

def parse_annotation(ann_dir, img_dir, labels=[]):

all_imgs = []

seen_labels = {}

for ann in sorted(os.listdir(ann_dir)):

if "xml" not in ann:

continue

img = {'object':[]}

tree = ET.parse(ann_dir + ann)

for elem in tree.iter():

if 'filename' in elem.tag:

path_to_image = img_dir + elem.text

img['filename'] = path_to_image

## make sure that the image exists:

if not os.path.exists(path_to_image):

assert False, "file does not exist!\n{}".format(path_to_image)

if 'width' in elem.tag:

img['width'] = int(elem.text)

if 'height' in elem.tag:

img['height'] = int(elem.text)

if 'object' in elem.tag or 'part' in elem.tag:

obj = {}

for attr in list(elem):

if 'name' in attr.tag:

obj['name'] = attr.text

if len(labels) > 0 and obj['name'] not in labels:

break

else:

img['object'] += [obj]

if obj['name'] in seen_labels:

seen_labels[obj['name']] += 1

else:

seen_labels[obj['name']] = 1

if 'bndbox' in attr.tag:

for dim in list(attr):

if 'xmin' in dim.tag:

obj['xmin'] = int(round(float(dim.text)))

if 'ymin' in dim.tag:

obj['ymin'] = int(round(float(dim.text)))

if 'xmax' in dim.tag:

obj['xmax'] = int(round(float(dim.text)))

if 'ymax' in dim.tag:

obj['ymax'] = int(round(float(dim.text)))

if len(img['object']) > 0:

all_imgs += [img]

return all_imgs, seen_labels

We prepare the arrays with training_image and seen_train_labels for the whole dataset.

As opposed to YOLOV1, YOLOV2 uses K-means clustering to find the best anchor box sizes for the given dataset.

The ANCHORS defined below are taken from the following blog:

Part 1 Object Detection using YOLOv2 on Pascal VOC2012 - anchor box clustering .

Instead of rerunning the K-means algorithm again, we use the ANCHORS obtained by

Yumi as it is.

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

import numpy as np

## Parse annotations

train_image_folder = "VOCdevkit/VOC2012/JPEGImages/"

train_annot_folder = "VOCdevkit/VOC2012/Annotations/"

ANCHORS = np.array([1.07709888, 1.78171903, # anchor box 1, width , height

2.71054693, 5.12469308, # anchor box 2, width, height

10.47181473, 10.09646365, # anchor box 3, width, height

5.48531347, 8.11011331]) # anchor box 4, width, height

LABELS = ['aeroplane', 'bicycle', 'bird', 'boat', 'bottle',

'bus', 'car', 'cat', 'chair', 'cow',

'diningtable','dog', 'horse', 'motorbike', 'person',

'pottedplant','sheep', 'sofa', 'train', 'tvmonitor']

train_image, seen_train_labels = parse_annotation(train_annot_folder,train_image_folder, labels=LABELS)

print("N train = {}".format(len(train_image)))

N train = 17125

Next, we define a ImageReader class to process an image. It takes in an image and returns the resized image and all the objects in the image.

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

import copy

import cv2

class ImageReader(object):

def __init__(self,IMAGE_H,IMAGE_W, norm=None):

self.IMAGE_H = IMAGE_H

self.IMAGE_W = IMAGE_W

self.norm = norm

def encode_core(self,image, reorder_rgb=True):

image = cv2.resize(image, (self.IMAGE_H, self.IMAGE_W))

if reorder_rgb:

image = image[:,:,::-1]

if self.norm is not None:

image = self.norm(image)

return(image)

def fit(self,train_instance):

'''

read in and resize the image, annotations are resized accordingly.

-- Input --

train_instance : dictionary containing filename, height, width and object

{'filename': 'ObjectDetectionRCNN/VOCdevkit/VOC2012/JPEGImages/2008_000054.jpg',

'height': 333,

'width': 500,

'object': [{'name': 'bird',

'xmax': 318,

'xmin': 284,

'ymax': 184,

'ymin': 100},

{'name': 'bird',

'xmax': 198,

'xmin': 112,

'ymax': 209,

'ymin': 146}]

}

'''

if not isinstance(train_instance,dict):

train_instance = {'filename':train_instance}

image_name = train_instance['filename']

image = cv2.imread(image_name)

h, w, c = image.shape

if image is None: print('Cannot find ', image_name)

image = self.encode_core(image, reorder_rgb=True)

if "object" in train_instance.keys():

all_objs = copy.deepcopy(train_instance['object'])

# fix object's position and size

for obj in all_objs:

for attr in ['xmin', 'xmax']:

obj[attr] = int(obj[attr] * float(self.IMAGE_W) / w)

obj[attr] = max(min(obj[attr], self.IMAGE_W), 0)

for attr in ['ymin', 'ymax']:

obj[attr] = int(obj[attr] * float(self.IMAGE_H) / h)

obj[attr] = max(min(obj[attr], self.IMAGE_H), 0)

else:

return image

return image, all_objs

Here’s a sample usage of the ImageReader class.

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

def normalize(image):

return image / 255.

print("*"*30)

print("Input")

timage = train_image[0]

for key, v in timage.items():

print(" {}: {}".format(key,v))

print("*"*30)

print("Output")

inputEncoder = ImageReader(IMAGE_H=416,IMAGE_W=416, norm=normalize)

image, all_objs = inputEncoder.fit(timage)

print(" {}".format(all_objs))

plt.imshow(image)

plt.title("image.shape={}".format(image.shape))

plt.show()

******************************

Input

object: [{'name': 'person' , 'xmin': 174 , 'ymin': 101 , 'xmax': 349 , 'ymax': 351 }]

filename: VOCdevkit/VOC2012/JPEGImages/2007_000027.jpg

width: 486

height: 500

******************************

Output

[{'name': 'person' , 'xmin': 148 , 'ymin': 84 , 'xmax': 298 , 'ymax': 292 }]

Next, we define BestAnchorBoxFinder which finds the best anchor box for a particular object. This is done by finding the anchor box with the highest IOU(Intersection over Union) with the bounding box of the object.

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

class BestAnchorBoxFinder(object):

def __init__(self, ANCHORS):

'''

ANCHORS: a np.array of even number length e.g.

_ANCHORS = [4,2, ## width=4, height=2, flat large anchor box

2,4, ## width=2, height=4, tall large anchor box

1,1] ## width=1, height=1, small anchor box

'''

self.anchors = [BoundBox(0, 0, ANCHORS[2*i], ANCHORS[2*i+1])

for i in range(int(len(ANCHORS)//2))]

def _interval_overlap(self,interval_a, interval_b):

x1, x2 = interval_a

x3, x4 = interval_b

if x3 < x1:

if x4 < x1:

return 0

else:

return min(x2,x4) - x1

else:

if x2 < x3:

return 0

else:

return min(x2,x4) - x3

def bbox_iou(self,box1, box2):

intersect_w = self._interval_overlap([box1.xmin, box1.xmax], [box2.xmin, box2.xmax])

intersect_h = self._interval_overlap([box1.ymin, box1.ymax], [box2.ymin, box2.ymax])

intersect = intersect_w * intersect_h

w1, h1 = box1.xmax-box1.xmin, box1.ymax-box1.ymin

w2, h2 = box2.xmax-box2.xmin, box2.ymax-box2.ymin

union = w1*h1 + w2*h2 - intersect

return float(intersect) / union

def find(self,center_w, center_h):

# find the anchor that best predicts this box

best_anchor = -1

max_iou = -1

# each Anchor box is specialized to have a certain shape.

# e.g., flat large rectangle, or small square

shifted_box = BoundBox(0, 0,center_w, center_h)

## For given object, find the best anchor box!

for i in range(len(self.anchors)): ## run through each anchor box

anchor = self.anchors[i]

iou = self.bbox_iou(shifted_box, anchor)

if max_iou < iou:

best_anchor = i

max_iou = iou

return(best_anchor,max_iou)

class BoundBox:

def __init__(self, xmin, ymin, xmax, ymax, confidence=None,classes=None):

self.xmin, self.ymin = xmin, ymin

self.xmax, self.ymax = xmax, ymax

## the code below are used during inference

# probability

self.confidence = confidence

# class probaiblities [c1, c2, .. cNclass]

self.set_class(classes)

def set_class(self,classes):

self.classes = classes

self.label = np.argmax(self.classes)

def get_label(self):

return(self.label)

def get_score(self):

return(self.classes[self.label])

Here’s a sample usage of the BestAnchorBoxFinder class.

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

# Anchor box width and height found in https://fairyonice.github.io/Part_1_Object_Detection_with_Yolo_for_VOC_2014_data_anchor_box_clustering.html

_ANCHORS01 = np.array([0.08285376, 0.13705531,

0.20850361, 0.39420716,

0.80552421, 0.77665105,

0.42194719, 0.62385487])

print(".."*40)

print("The three example anchor boxes:")

count = 0

for i in range(0,len(_ANCHORS01),2):

print("anchor box index={}, w={}, h={}".format(count,_ANCHORS01[i],_ANCHORS01[i+1]))

count += 1

print(".."*40)

print("Allocate bounding box of various width and height into the three anchor boxes:")

babf = BestAnchorBoxFinder(_ANCHORS01)

for w in range(1,9,2):

w /= 10.

for h in range(1,9,2):

h /= 10.

best_anchor,max_iou = babf.find(w,h)

print("bounding box (w = {}, h = {}) --> best anchor box index = {}, iou = {:03.2f}".format(

w,h,best_anchor,max_iou))

................................................................................

The three example anchor boxes:

anchor box index=0, w=0.08285376, h=0.13705531

anchor box index=1, w=0.20850361, h=0.39420716

anchor box index=2, w=0.80552421, h=0.77665105

anchor box index=3, w=0.42194719, h=0.62385487

................................................................................

Allocate bounding box of various width and height into the three anchor boxes:

bounding box (w = 0.1 , h = 0.1 ) --> best anchor box index = 0 , iou = 0.63

bounding box (w = 0.1 , h = 0.3 ) --> best anchor box index = 0 , iou = 0.38

bounding box (w = 0.1 , h = 0.5 ) --> best anchor box index = 1 , iou = 0.42

bounding box (w = 0.1 , h = 0.7 ) --> best anchor box index = 1 , iou = 0.35

bounding box (w = 0.3 , h = 0.1 ) --> best anchor box index = 0 , iou = 0.25

bounding box (w = 0.3 , h = 0.3 ) --> best anchor box index = 1 , iou = 0.57

bounding box (w = 0.3 , h = 0.5 ) --> best anchor box index = 3 , iou = 0.57

bounding box (w = 0.3 , h = 0.7 ) --> best anchor box index = 3 , iou = 0.65

bounding box (w = 0.5 , h = 0.1 ) --> best anchor box index = 1 , iou = 0.19

bounding box (w = 0.5 , h = 0.3 ) --> best anchor box index = 3 , iou = 0.44

bounding box (w = 0.5 , h = 0.5 ) --> best anchor box index = 3 , iou = 0.70

bounding box (w = 0.5 , h = 0.7 ) --> best anchor box index = 3 , iou = 0.75

bounding box (w = 0.7 , h = 0.1 ) --> best anchor box index = 1 , iou = 0.16

bounding box (w = 0.7 , h = 0.3 ) --> best anchor box index = 3 , iou = 0.37

bounding box (w = 0.7 , h = 0.5 ) --> best anchor box index = 2 , iou = 0.56

bounding box (w = 0.7 , h = 0.7 ) --> best anchor box index = 2 , iou = 0.78

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

def rescale_centerxy(obj,config):

'''

obj: dictionary containing xmin, xmax, ymin, ymax

config : dictionary containing IMAGE_W, GRID_W, IMAGE_H and GRID_H

'''

center_x = .5*(obj['xmin'] + obj['xmax'])

center_x = center_x / (float(config['IMAGE_W']) / config['GRID_W'])

center_y = .5*(obj['ymin'] + obj['ymax'])

center_y = center_y / (float(config['IMAGE_H']) / config['GRID_H'])

return(center_x,center_y)

def rescale_cebterwh(obj,config):

'''

obj: dictionary containing xmin, xmax, ymin, ymax

config : dictionary containing IMAGE_W, GRID_W, IMAGE_H and GRID_H

'''

# unit: grid cell

center_w = (obj['xmax'] - obj['xmin']) / (float(config['IMAGE_W']) / config['GRID_W'])

# unit: grid cell

center_h = (obj['ymax'] - obj['ymin']) / (float(config['IMAGE_H']) / config['GRID_H'])

return(center_w,center_h)

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

obj = {'xmin': 150, 'ymin': 84, 'xmax': 300, 'ymax': 294}

config = {"IMAGE_W":416,"IMAGE_H":416,"GRID_W":13,"GRID_H":13}

center_x, center_y = rescale_centerxy(obj,config)

center_w, center_h = rescale_cebterwh(obj,config)

print("cebter_x abd cebter_w should range between 0 and {}".format(config["GRID_W"]))

print("cebter_y abd cebter_h should range between 0 and {}".format(config["GRID_H"]))

print("center_x = {:06.3f} range between 0 and {}".format(center_x, config["GRID_W"]))

print("center_y = {:06.3f} range between 0 and {}".format(center_y, config["GRID_H"]))

print("center_w = {:06.3f} range between 0 and {}".format(center_w, config["GRID_W"]))

print("center_h = {:06.3f} range between 0 and {}".format(center_h, config["GRID_H"]))

cebter_x abd cebter_w should range between 0 and 13

cebter_y abd cebter_h should range between 0 and 13

center_x = 07.031 range between 0 and 13

center_y = 05.906 range between 0 and 13

center_w = 04.688 range between 0 and 13

center_h = 06.562 range between 0 and 13

Next, we define a custom Batch generator to get a batch of 16 images and its corresponding bounding boxes.

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

from tensorflow.keras.utils import Sequence

class SimpleBatchGenerator(Sequence):

def __init__(self, images, config, norm=None, shuffle=True):

'''

config : dictionary containing necessary hyper parameters for traning. e.g.,

{

'IMAGE_H' : 416,

'IMAGE_W' : 416,

'GRID_H' : 13,

'GRID_W' : 13,

'LABELS' : ['aeroplane', 'bicycle', 'bird', 'boat', 'bottle',

'bus', 'car', 'cat', 'chair', 'cow',

'diningtable','dog', 'horse', 'motorbike', 'person',

'pottedplant','sheep', 'sofa', 'train', 'tvmonitor'],

'ANCHORS' : array([ 1.07709888, 1.78171903,

2.71054693, 5.12469308,

10.47181473, 10.09646365,

5.48531347, 8.11011331]),

'BATCH_SIZE' : 16,

'TRUE_BOX_BUFFER' : 50,

}

'''

self.config = config

self.config["BOX"] = int(len(self.config['ANCHORS'])/2)

self.config["CLASS"] = len(self.config['LABELS'])

self.images = images

self.bestAnchorBoxFinder = BestAnchorBoxFinder(config['ANCHORS'])

self.imageReader = ImageReader(config['IMAGE_H'],config['IMAGE_W'],norm=norm)

self.shuffle = shuffle

if self.shuffle:

np.random.shuffle(self.images)

def __len__(self):

return int(np.ceil(float(len(self.images))/self.config['BATCH_SIZE']))

def __getitem__(self, idx):

'''

== input ==

idx : non-negative integer value e.g., 0

== output ==

x_batch: The numpy array of shape (BATCH_SIZE, IMAGE_H, IMAGE_W, N channels).

x_batch[iframe,:,:,:] contains a iframeth frame of size (IMAGE_H,IMAGE_W).

y_batch:

The numpy array of shape (BATCH_SIZE, GRID_H, GRID_W, BOX, 4 + 1 + N classes).

BOX = The number of anchor boxes.

y_batch[iframe,igrid_h,igrid_w,ianchor,:4] contains (center_x,center_y,center_w,center_h)

of ianchorth anchor at grid cell=(igrid_h,igrid_w) if the object exists in

this (grid cell, anchor) pair, else they simply contain 0.

y_batch[iframe,igrid_h,igrid_w,ianchor,4] contains 1 if the object exists in this

(grid cell, anchor) pair, else it contains 0.

y_batch[iframe,igrid_h,igrid_w,ianchor,5 + iclass] contains 1 if the iclass^th

class object exists in this (grid cell, anchor) pair, else it contains 0.

b_batch:

The numpy array of shape (BATCH_SIZE, 1, 1, 1, TRUE_BOX_BUFFER, 4).

b_batch[iframe,1,1,1,ibuffer,ianchor,:] contains ibufferth object's

(center_x,center_y,center_w,center_h) in iframeth frame.

If ibuffer > N objects in iframeth frame, then the values are simply 0.

TRUE_BOX_BUFFER has to be some large number, so that the frame with the

biggest number of objects can also record all objects.

The order of the objects do not matter.

This is just a hack to easily calculate loss.

'''

l_bound = idx*self.config['BATCH_SIZE']

r_bound = (idx+1)*self.config['BATCH_SIZE']

if r_bound > len(self.images):

r_bound = len(self.images)

l_bound = r_bound - self.config['BATCH_SIZE']

instance_count = 0

## prepare empty storage space: this will be output

x_batch = np.zeros((r_bound - l_bound, self.config['IMAGE_H'], self.config['IMAGE_W'], 3)) # input images

b_batch = np.zeros((r_bound - l_bound, 1 , 1 , 1 , self.config['TRUE_BOX_BUFFER'], 4)) # list of self.config['TRUE_self.config['BOX']_BUFFER'] GT boxes

y_batch = np.zeros((r_bound - l_bound, self.config['GRID_H'], self.config['GRID_W'], self.config['BOX'], 4+1+len(self.config['LABELS']))) # desired network output

for train_instance in self.images[l_bound:r_bound]:

# augment input image and fix object's position and size

img, all_objs = self.imageReader.fit(train_instance)

# construct output from object's x, y, w, h

true_box_index = 0

for obj in all_objs:

if obj['xmax'] > obj['xmin'] and obj['ymax'] > obj['ymin'] and obj['name'] in self.config['LABELS']:

center_x, center_y = rescale_centerxy(obj,self.config)

grid_x = int(np.floor(center_x))

grid_y = int(np.floor(center_y))

if grid_x < self.config['GRID_W'] and grid_y < self.config['GRID_H']:

obj_indx = self.config['LABELS'].index(obj['name'])

center_w, center_h = rescale_cebterwh(obj,self.config)

box = [center_x, center_y, center_w, center_h]

best_anchor,max_iou = self.bestAnchorBoxFinder.find(center_w, center_h)

# assign ground truth x, y, w, h, confidence and class probs to y_batch

# it could happen that the same grid cell contain 2 similar shape objects

# as a result the same anchor box is selected as the best anchor box by the multiple objects

# in such ase, the object is over written

y_batch[instance_count, grid_y, grid_x, best_anchor, 0:4] = box # center_x, center_y, w, h

y_batch[instance_count, grid_y, grid_x, best_anchor, 4 ] = 1. # ground truth confidence is 1

y_batch[instance_count, grid_y, grid_x, best_anchor, 5+obj_indx] = 1 # class probability of the object

# assign the true box to b_batch

b_batch[instance_count, 0, 0, 0, true_box_index] = box

true_box_index += 1

true_box_index = true_box_index % self.config['TRUE_BOX_BUFFER']

x_batch[instance_count] = img

# increase instance counter in current batch

instance_count += 1

return [x_batch, b_batch], y_batch

def on_epoch_end(self):

if self.shuffle:

np.random.shuffle(self.images)

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

GRID_H, GRID_W = 13 , 13

ANCHORS = _ANCHORS01

ANCHORS[::2] = ANCHORS[::2]*GRID_W

ANCHORS[1::2] = ANCHORS[1::2]*GRID_H

ANCHORS

array([ 1.07709888 , 1.78171903 , 2.71054693 , 5.12469308 , 10.47181473 ,

10.09646365 , 5.48531347 , 8.11011331 ])

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

IMAGE_H, IMAGE_W = 416, 416

BATCH_SIZE = 16

TRUE_BOX_BUFFER = 50

BOX = int(len(ANCHORS)/2)

CLASS = len(LABELS)

generator_config = {

'IMAGE_H' : IMAGE_H,

'IMAGE_W' : IMAGE_W,

'GRID_H' : GRID_H,

'GRID_W' : GRID_W,

'BOX' : BOX,

'LABELS' : LABELS,

'ANCHORS' : ANCHORS,

'BATCH_SIZE' : BATCH_SIZE,

'TRUE_BOX_BUFFER' : TRUE_BOX_BUFFER,

}

train_batch_generator = SimpleBatchGenerator(train_image, generator_config,

norm=normalize, shuffle=True)

[x_batch,b_batch],y_batch = train_batch_generator.__getitem__(idx=3)

print("x_batch: (BATCH_SIZE, IMAGE_H, IMAGE_W, N channels) = {}".format(x_batch.shape))

print("y_batch: (BATCH_SIZE, GRID_H, GRID_W, BOX, 4 + 1 + N classes) = {}".format(y_batch.shape))

print("b_batch: (BATCH_SIZE, 1, 1, 1, TRUE_BOX_BUFFER, 4) = {}".format(b_batch.shape))

x_batch: (BATCH_SIZE, IMAGE_H, IMAGE_W, N channels) = (16, 416 , 416 , 3 )

y_batch: (BATCH_SIZE, GRID_H, GRID_W, BOX, 4 + 1 + N classes) = (16, 13 , 13 , 4 , 25 )

b_batch: (BATCH_SIZE, 1 , 1 , 1 , TRUE_BOX_BUFFER, 4 ) = (16, 1 , 1 , 1 , 50 , 4 )

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

iframe= 1

def check_object_in_grid_anchor_pair(irow):

for igrid_h in range(generator_config["GRID_H"]):

for igrid_w in range(generator_config["GRID_W"]):

for ianchor in range(generator_config["BOX"]):

vec = y_batch[irow,igrid_h,igrid_w,ianchor,:]

C = vec[4] ## ground truth confidence

if C == 1:

class_nm = np.array(LABELS)[np.where(vec[5:])]

assert len(class_nm) == 1

print("igrid_h={:02.0f},igrid_w={:02.0f},iAnchor={:02.0f}, {}".format(

igrid_h,igrid_w,ianchor,class_nm[0]))

check_object_in_grid_anchor_pair(iframe)

igrid_h =11 ,igrid_w=06 ,iAnchor=00 , person

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

def plot_image_with_grid_cell_partition(irow):

img = x_batch[irow]

plt.figure(figsize=(15,15))

plt.imshow(img)

for wh in ["W","H"]:

GRID_ = generator_config["GRID_" + wh] ## 13

IMAGE_ = generator_config["IMAGE_" + wh] ## 416

if wh == "W":

pltax = plt.axvline

plttick = plt.xticks

else:

pltax = plt.axhline

plttick = plt.yticks

for count in range(GRID_):

l = IMAGE_*count/GRID_

pltax(l,color="yellow",alpha=0.3)

plttick([(i + 0.5)*IMAGE_/GRID_ for i in range(GRID_)],

["iGRID{}={}".format(wh,i) for i in range(GRID_)])

def plot_grid(irow):

import seaborn as sns

color_palette = list(sns.xkcd_rgb.values())

iobj = 0

for igrid_h in range(generator_config["GRID_H"]):

for igrid_w in range(generator_config["GRID_W"]):

for ianchor in range(generator_config["BOX"]):

vec = y_batch[irow,igrid_h,igrid_w,ianchor,:]

C = vec[4] ## ground truth confidence

if C == 1:

class_nm = np.array(LABELS)[np.where(vec[5:])]

x, y, w, h = vec[:4]

multx = generator_config["IMAGE_W"]/generator_config["GRID_W"]

multy = generator_config["IMAGE_H"]/generator_config["GRID_H"]

c = color_palette[iobj]

iobj += 1

xmin = x - 0.5*w

ymin = y - 0.5*h

xmax = x + 0.5*w

ymax = y + 0.5*h

# center

plt.text(x*multx,y*multy,

"X",color=c,fontsize=23)

plt.plot(np.array([xmin,xmin])*multx,

np.array([ymin,ymax])*multy,color=c,linewidth=10)

plt.plot(np.array([xmin,xmax])*multx,

np.array([ymin,ymin])*multy,color=c,linewidth=10)

plt.plot(np.array([xmax,xmax])*multx,

np.array([ymax,ymin])*multy,color=c,linewidth=10)

plt.plot(np.array([xmin,xmax])*multx,

np.array([ymax,ymax])*multy,color=c,linewidth=10)

plot_image_with_grid_cell_partition(iframe)

plot_grid(iframe)

plt.show()

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

for irow in range(5, 10):

print("-"*30)

check_object_in_grid_anchor_pair(irow)

plot_image_with_grid_cell_partition(irow)

plot_grid(irow)

plt.show()

------------------------------

igrid_h=07,igrid_w=05,iAnchor=03, person

igrid_h=08,igrid_w=05,iAnchor=03, person

igrid_h=09,igrid_w=05,iAnchor=02, sofa

igrid_h=08,igrid_w=06,iAnchor=02, bird

igrid_h=09,igrid_w=08,iAnchor=02, sofa

igrid_h=05,igrid_w=06,iAnchor=02, dog

igrid_h=06,igrid_w=06,iAnchor=02, car

Next, I am adding a function to prepare the input and the output. The input is a (448, 448, 3) image and the output is a (7, 7, 30) tensor. The output is based on S x S x (B * 5 +C).

S X S is the number of grids

B is the number of bounding boxes per grid

C is the number of predictions per grid

Training the model Next, I am defining a custom generator that returns a batch of input and outputs.

Next, we create instances of the generator for our training and validation sets.

Define a custom output layer We need to reshape the output from the model so we define a custom Keras layer for it.

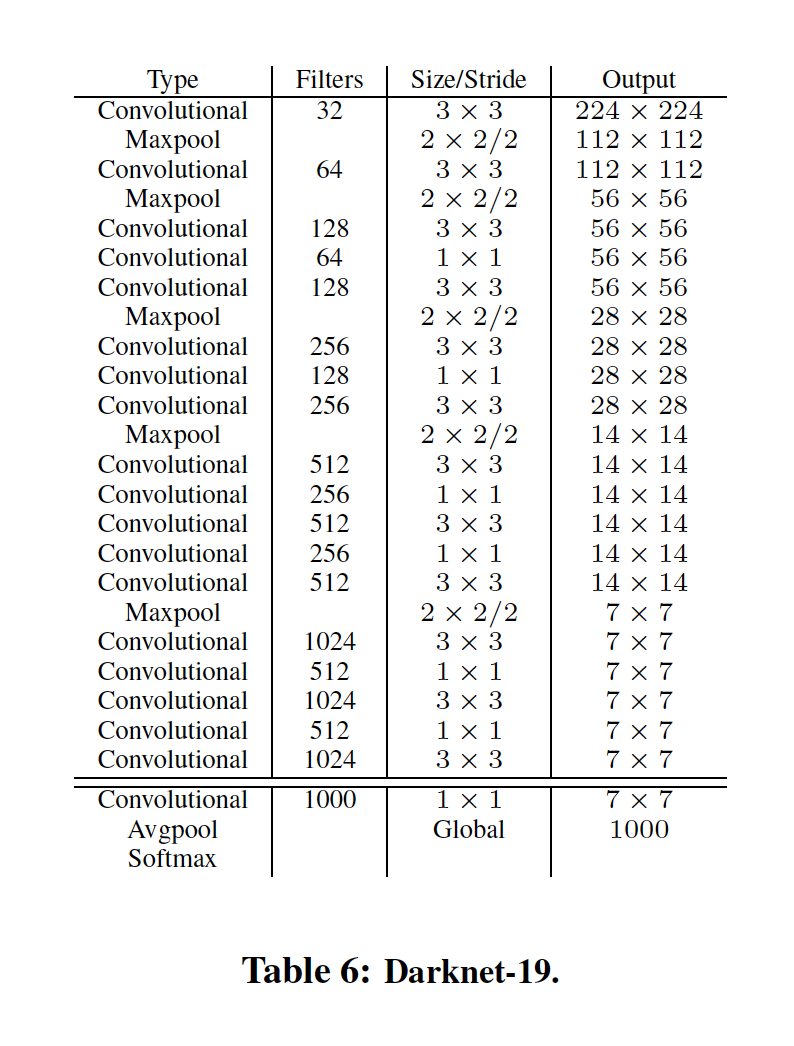

Defining the YOLO model. Next, we define the model as described in the original paper.

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

from tensorflow.keras.models import Sequential, Model

from tensorflow.keras.layers import Reshape, Activation, Conv2D, Input, MaxPooling2D, BatchNormalization, Flatten, Dense, Lambda, LeakyReLU, concatenate

from tensorflow.keras.callbacks import EarlyStopping, ModelCheckpoint, TensorBoard

from tensorflow.keras.optimizers import SGD, Adam, RMSprop

import tensorflow.keras.backend as K

import tensorflow as tf

# the function to implement the orgnization layer (thanks to github.com/allanzelener/YAD2K)

def space_to_depth_x2(x):

return tf.nn.space_to_depth(x, block_size=2)

input_image = Input(shape=(IMAGE_H, IMAGE_W, 3))

true_boxes = Input(shape=(1, 1, 1, TRUE_BOX_BUFFER , 4))

# Layer 1

x = Conv2D(32, (3,3), strides=(1,1), padding='same', name='conv_1', use_bias=False)(input_image)

x = BatchNormalization(name='norm_1')(x)

x = LeakyReLU(alpha=0.1)(x)

x = MaxPooling2D(pool_size=(2, 2))(x)

# Layer 2

x = Conv2D(64, (3,3), strides=(1,1), padding='same', name='conv_2', use_bias=False)(x)

x = BatchNormalization(name='norm_2')(x)

x = LeakyReLU(alpha=0.1)(x)

x = MaxPooling2D(pool_size=(2, 2))(x)

# Layer 3

x = Conv2D(128, (3,3), strides=(1,1), padding='same', name='conv_3', use_bias=False)(x)

x = BatchNormalization(name='norm_3')(x)

x = LeakyReLU(alpha=0.1)(x)

# Layer 4

x = Conv2D(64, (1,1), strides=(1,1), padding='same', name='conv_4', use_bias=False)(x)

x = BatchNormalization(name='norm_4')(x)

x = LeakyReLU(alpha=0.1)(x)

# Layer 5

x = Conv2D(128, (3,3), strides=(1,1), padding='same', name='conv_5', use_bias=False)(x)

x = BatchNormalization(name='norm_5')(x)

x = LeakyReLU(alpha=0.1)(x)

x = MaxPooling2D(pool_size=(2, 2))(x)

# Layer 6

x = Conv2D(256, (3,3), strides=(1,1), padding='same', name='conv_6', use_bias=False)(x)

x = BatchNormalization(name='norm_6')(x)

x = LeakyReLU(alpha=0.1)(x)

# Layer 7

x = Conv2D(128, (1,1), strides=(1,1), padding='same', name='conv_7', use_bias=False)(x)

x = BatchNormalization(name='norm_7')(x)

x = LeakyReLU(alpha=0.1)(x)

# Layer 8

x = Conv2D(256, (3,3), strides=(1,1), padding='same', name='conv_8', use_bias=False)(x)

x = BatchNormalization(name='norm_8')(x)

x = LeakyReLU(alpha=0.1)(x)

x = MaxPooling2D(pool_size=(2, 2))(x)

# Layer 9

x = Conv2D(512, (3,3), strides=(1,1), padding='same', name='conv_9', use_bias=False)(x)

x = BatchNormalization(name='norm_9')(x)

x = LeakyReLU(alpha=0.1)(x)

# Layer 10

x = Conv2D(256, (1,1), strides=(1,1), padding='same', name='conv_10', use_bias=False)(x)

x = BatchNormalization(name='norm_10')(x)

x = LeakyReLU(alpha=0.1)(x)

# Layer 11

x = Conv2D(512, (3,3), strides=(1,1), padding='same', name='conv_11', use_bias=False)(x)

x = BatchNormalization(name='norm_11')(x)

x = LeakyReLU(alpha=0.1)(x)

# Layer 12

x = Conv2D(256, (1,1), strides=(1,1), padding='same', name='conv_12', use_bias=False)(x)

x = BatchNormalization(name='norm_12')(x)

x = LeakyReLU(alpha=0.1)(x)

# Layer 13

x = Conv2D(512, (3,3), strides=(1,1), padding='same', name='conv_13', use_bias=False)(x)

x = BatchNormalization(name='norm_13')(x)

x = LeakyReLU(alpha=0.1)(x)

skip_connection = x

x = MaxPooling2D(pool_size=(2, 2))(x)

# Layer 14

x = Conv2D(1024, (3,3), strides=(1,1), padding='same', name='conv_14', use_bias=False)(x)

x = BatchNormalization(name='norm_14')(x)

x = LeakyReLU(alpha=0.1)(x)

# Layer 15

x = Conv2D(512, (1,1), strides=(1,1), padding='same', name='conv_15', use_bias=False)(x)

x = BatchNormalization(name='norm_15')(x)

x = LeakyReLU(alpha=0.1)(x)

# Layer 16

x = Conv2D(1024, (3,3), strides=(1,1), padding='same', name='conv_16', use_bias=False)(x)

x = BatchNormalization(name='norm_16')(x)

x = LeakyReLU(alpha=0.1)(x)

# Layer 17

x = Conv2D(512, (1,1), strides=(1,1), padding='same', name='conv_17', use_bias=False)(x)

x = BatchNormalization(name='norm_17')(x)

x = LeakyReLU(alpha=0.1)(x)

# Layer 18

x = Conv2D(1024, (3,3), strides=(1,1), padding='same', name='conv_18', use_bias=False)(x)

x = BatchNormalization(name='norm_18')(x)

x = LeakyReLU(alpha=0.1)(x)

# Layer 19

x = Conv2D(1024, (3,3), strides=(1,1), padding='same', name='conv_19', use_bias=False)(x)

x = BatchNormalization(name='norm_19')(x)

x = LeakyReLU(alpha=0.1)(x)

# Layer 20

x = Conv2D(1024, (3,3), strides=(1,1), padding='same', name='conv_20', use_bias=False)(x)

x = BatchNormalization(name='norm_20')(x)

x = LeakyReLU(alpha=0.1)(x)

# Layer 21

skip_connection = Conv2D(64, (1,1), strides=(1,1), padding='same', name='conv_21', use_bias=False)(skip_connection)

skip_connection = BatchNormalization(name='norm_21')(skip_connection)

skip_connection = LeakyReLU(alpha=0.1)(skip_connection)

skip_connection = Lambda(space_to_depth_x2)(skip_connection)

x = concatenate([skip_connection, x])

# Layer 22

x = Conv2D(1024, (3,3), strides=(1,1), padding='same', name='conv_22', use_bias=False)(x)

x = BatchNormalization(name='norm_22')(x)

x = LeakyReLU(alpha=0.1)(x)

# Layer 23

x = Conv2D(BOX * (4 + 1 + CLASS), (1,1), strides=(1,1), padding='same', name='conv_23')(x)

output = Reshape((GRID_H, GRID_W, BOX, 4 + 1 + CLASS))(x)

# small hack to allow true_boxes to be registered when Keras build the model

# for more information: https://github.com/fchollet/keras/issues/2790

output = Lambda(lambda args: args[0])([output, true_boxes])

model = Model([input_image, true_boxes], output)

model.summary()

Model: "model_1"

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

Layer (type) Output Shape Param # Connected to

==================================================================================================

input_3 (InputLayer) [(None, 416, 416, 3) 0

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

conv_1 (Conv2D) (None, 416, 416, 32) 864 input_ 3[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

norm_1 (BatchNormalization) (None, 416, 416, 32) 128 conv_ 1[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

leaky_re_ lu_22 (LeakyReLU) (None, 416, 416, 32) 0 norm_ 1[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

max_pooling2d_ 5 (MaxPooling2D) (None, 208, 208, 32) 0 leaky_re_ lu_22[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

conv_2 (Conv2D) (None, 208, 208, 64) 18432 max_ pooling2d_5[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

norm_2 (BatchNormalization) (None, 208, 208, 64) 256 conv_ 2[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

leaky_re_ lu_23 (LeakyReLU) (None, 208, 208, 64) 0 norm_ 2[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

max_pooling2d_ 6 (MaxPooling2D) (None, 104, 104, 64) 0 leaky_re_ lu_23[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

conv_3 (Conv2D) (None, 104, 104, 128 73728 max_ pooling2d_6[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

norm_3 (BatchNormalization) (None, 104, 104, 128 512 conv_ 3[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

leaky_re_ lu_24 (LeakyReLU) (None, 104, 104, 128 0 norm_ 3[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

conv_4 (Conv2D) (None, 104, 104, 64) 8192 leaky_ re_lu_ 24[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

norm_4 (BatchNormalization) (None, 104, 104, 64) 256 conv_ 4[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

leaky_re_ lu_25 (LeakyReLU) (None, 104, 104, 64) 0 norm_ 4[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

conv_5 (Conv2D) (None, 104, 104, 128 73728 leaky_ re_lu_ 25[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

norm_5 (BatchNormalization) (None, 104, 104, 128 512 conv_ 5[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

leaky_re_ lu_26 (LeakyReLU) (None, 104, 104, 128 0 norm_ 5[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

max_pooling2d_ 7 (MaxPooling2D) (None, 52, 52, 128) 0 leaky_re_ lu_26[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

conv_6 (Conv2D) (None, 52, 52, 256) 294912 max_ pooling2d_7[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

norm_6 (BatchNormalization) (None, 52, 52, 256) 1024 conv_ 6[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

leaky_re_ lu_27 (LeakyReLU) (None, 52, 52, 256) 0 norm_ 6[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

conv_7 (Conv2D) (None, 52, 52, 128) 32768 leaky_ re_lu_ 27[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

norm_7 (BatchNormalization) (None, 52, 52, 128) 512 conv_ 7[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

leaky_re_ lu_28 (LeakyReLU) (None, 52, 52, 128) 0 norm_ 7[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

conv_8 (Conv2D) (None, 52, 52, 256) 294912 leaky_ re_lu_ 28[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

norm_8 (BatchNormalization) (None, 52, 52, 256) 1024 conv_ 8[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

leaky_re_ lu_29 (LeakyReLU) (None, 52, 52, 256) 0 norm_ 8[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

max_pooling2d_ 8 (MaxPooling2D) (None, 26, 26, 256) 0 leaky_re_ lu_29[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

conv_9 (Conv2D) (None, 26, 26, 512) 1179648 max_ pooling2d_8[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

norm_9 (BatchNormalization) (None, 26, 26, 512) 2048 conv_ 9[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

leaky_re_ lu_30 (LeakyReLU) (None, 26, 26, 512) 0 norm_ 9[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

conv_10 (Conv2D) (None, 26, 26, 256) 131072 leaky_ re_lu_ 30[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

norm_10 (BatchNormalization) (None, 26, 26, 256) 1024 conv_ 10[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

leaky_re_ lu_31 (LeakyReLU) (None, 26, 26, 256) 0 norm_ 10[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

conv_11 (Conv2D) (None, 26, 26, 512) 1179648 leaky_ re_lu_ 31[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

norm_11 (BatchNormalization) (None, 26, 26, 512) 2048 conv_ 11[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

leaky_re_ lu_32 (LeakyReLU) (None, 26, 26, 512) 0 norm_ 11[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

conv_12 (Conv2D) (None, 26, 26, 256) 131072 leaky_ re_lu_ 32[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

norm_12 (BatchNormalization) (None, 26, 26, 256) 1024 conv_ 12[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

leaky_re_ lu_33 (LeakyReLU) (None, 26, 26, 256) 0 norm_ 12[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

conv_13 (Conv2D) (None, 26, 26, 512) 1179648 leaky_ re_lu_ 33[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

norm_13 (BatchNormalization) (None, 26, 26, 512) 2048 conv_ 13[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

leaky_re_ lu_34 (LeakyReLU) (None, 26, 26, 512) 0 norm_ 13[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

max_pooling2d_ 9 (MaxPooling2D) (None, 13, 13, 512) 0 leaky_re_ lu_34[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

conv_14 (Conv2D) (None, 13, 13, 1024) 4718592 max_ pooling2d_9[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

norm_14 (BatchNormalization) (None, 13, 13, 1024) 4096 conv_ 14[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

leaky_re_ lu_35 (LeakyReLU) (None, 13, 13, 1024) 0 norm_ 14[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

conv_15 (Conv2D) (None, 13, 13, 512) 524288 leaky_ re_lu_ 35[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

norm_15 (BatchNormalization) (None, 13, 13, 512) 2048 conv_ 15[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

leaky_re_ lu_36 (LeakyReLU) (None, 13, 13, 512) 0 norm_ 15[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

conv_16 (Conv2D) (None, 13, 13, 1024) 4718592 leaky_ re_lu_ 36[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

norm_16 (BatchNormalization) (None, 13, 13, 1024) 4096 conv_ 16[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

leaky_re_ lu_37 (LeakyReLU) (None, 13, 13, 1024) 0 norm_ 16[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

conv_17 (Conv2D) (None, 13, 13, 512) 524288 leaky_ re_lu_ 37[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

norm_17 (BatchNormalization) (None, 13, 13, 512) 2048 conv_ 17[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

leaky_re_ lu_38 (LeakyReLU) (None, 13, 13, 512) 0 norm_ 17[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

conv_18 (Conv2D) (None, 13, 13, 1024) 4718592 leaky_ re_lu_ 38[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

norm_18 (BatchNormalization) (None, 13, 13, 1024) 4096 conv_ 18[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

leaky_re_ lu_39 (LeakyReLU) (None, 13, 13, 1024) 0 norm_ 18[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

conv_19 (Conv2D) (None, 13, 13, 1024) 9437184 leaky_ re_lu_ 39[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

norm_19 (BatchNormalization) (None, 13, 13, 1024) 4096 conv_ 19[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

conv_21 (Conv2D) (None, 26, 26, 64) 32768 leaky_ re_lu_ 34[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

leaky_re_ lu_40 (LeakyReLU) (None, 13, 13, 1024) 0 norm_ 19[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

norm_21 (BatchNormalization) (None, 26, 26, 64) 256 conv_ 21[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

conv_20 (Conv2D) (None, 13, 13, 1024) 9437184 leaky_ re_lu_ 40[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

leaky_re_ lu_42 (LeakyReLU) (None, 26, 26, 64) 0 norm_ 21[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

norm_20 (BatchNormalization) (None, 13, 13, 1024) 4096 conv_ 20[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

lambda_2 (Lambda) (None, 13, 13, 256) 0 leaky_ re_lu_ 42[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

leaky_re_ lu_41 (LeakyReLU) (None, 13, 13, 1024) 0 norm_ 20[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

concatenate_1 (Concatenate) (None, 13, 13, 1280) 0 lambda_ 2[0 ][0 ]

leaky_re_lu_41[0][0]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

conv_22 (Conv2D) (None, 13, 13, 1024) 11796480 concatenate_ 1[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

norm_22 (BatchNormalization) (None, 13, 13, 1024) 4096 conv_ 22[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

leaky_re_ lu_43 (LeakyReLU) (None, 13, 13, 1024) 0 norm_ 22[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

conv_23 (Conv2D) (None, 13, 13, 100) 102500 leaky_ re_lu_ 43[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

reshape_1 (Reshape) (None, 13, 13, 4, 25 0 conv_ 23[0 ][0 ]

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

input_4 (InputLayer) [(None, 1, 1, 1, 50, 0

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

lambda_3 (Lambda) (None, 13, 13, 4, 25 0 reshape_ 1[0 ][0 ]

input_4[0][0]

==================================================================================================

Total params: 50,650,436

Trainable params: 50,629,764

Non-trainable params: 20,672

_____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ _____ ___

Next, we download the pre-trained weights for YOLO V2.

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

!wget https://pjreddie.com/media/files/yolov2.weights

--2020-07-06 21 :02:41-- https://pjreddie.com/media/files/yolov2.weights

Resolving pjreddie.com (pjreddie.com)... 128.208 .4 .108

Connecting to pjreddie.com (pjreddie.com)|128.208.4.108|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 203934260 (194M) [application/octet-stream]

Saving to: ‘yolov2.weights.1’

yolov2.weights.1 100 %[===================>] 194. 49M 867KB/s in 3m 6s

2020 -07 -06 21 :05:47 (1.05 MB/s) - ‘yolov2.weights.1’ saved [203934260/203934260]

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

path_to_weight = "./yolov2.weights"

class WeightReader:

def __init__(self, weight_file):

self.offset = 4

self.all_weights = np.fromfile(weight_file, dtype='float32')

def read_bytes(self, size):

self.offset = self.offset + size

return self.all_weights[self.offset-size:self.offset]

def reset(self):

self.offset = 4

weight_reader = WeightReader(path_to_weight)

print("all_weights.shape = {}".format(weight_reader.all_weights.shape))

all_weights.shape = (50983565 ,)

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

weight_reader.reset()

nb_conv = 23

for i in range(1, nb_conv+1):

conv_layer = model.get_layer('conv_' + str(i))

if i < nb_conv:

norm_layer = model.get_layer('norm_' + str(i))

size = np.prod(norm_layer.get_weights()[0].shape)

beta = weight_reader.read_bytes(size)

gamma = weight_reader.read_bytes(size)

mean = weight_reader.read_bytes(size)

var = weight_reader.read_bytes(size)

weights = norm_layer.set_weights([gamma, beta, mean, var])

if len(conv_layer.get_weights()) > 1:

bias = weight_reader.read_bytes(np.prod(conv_layer.get_weights()[1].shape))

kernel = weight_reader.read_bytes(np.prod(conv_layer.get_weights()[0].shape))

kernel = kernel.reshape(list(reversed(conv_layer.get_weights()[0].shape)))

kernel = kernel.transpose([2,3,1,0])

conv_layer.set_weights([kernel, bias])

else:

kernel = weight_reader.read_bytes(np.prod(conv_layer.get_weights()[0].shape))

kernel = kernel.reshape(list(reversed(conv_layer.get_weights()[0].shape)))

kernel = kernel.transpose([2,3,1,0])

conv_layer.set_weights([kernel])

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

layer = model.layers[-4] # the last convolutional layer

weights = layer.get_weights()

new_kernel = np.random.normal(size=weights[0].shape)/(GRID_H*GRID_W)

new_bias = np.random.normal(size=weights[1].shape)/(GRID_H*GRID_W)

layer.set_weights([new_kernel, new_bias])

Define a custom learning rate scheduler The paper uses different learning rates for different epochs. So we define a custom Callback function for the learning rate.

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

from tensorflow import keras

class CustomLearningRateScheduler(keras.callbacks.Callback):

def __init__(self, schedule):

super(CustomLearningRateScheduler, self).__init__()

self.schedule = schedule

def on_epoch_begin(self, epoch, logs=None):

if not hasattr(self.model.optimizer, "lr"):

raise ValueError('Optimizer must have a "lr" attribute.')

# Get the current learning rate from model's optimizer.

lr = float(tf.keras.backend.get_value(self.model.optimizer.learning_rate))

# Call schedule function to get the scheduled learning rate.

scheduled_lr = self.schedule(epoch, lr)

# Set the value back to the optimizer before this epoch starts

tf.keras.backend.set_value(self.model.optimizer.lr, scheduled_lr)

print("\nEpoch %05d: Learning rate is %6.4f." % (epoch, scheduled_lr))

LR_SCHEDULE = [

# (epoch to start, learning rate) tuples

(0, 0.01),

(75, 0.001),

(105, 0.0001),

]

def lr_schedule(epoch, lr):

"""Helper function to retrieve the scheduled learning rate based on epoch."""

if epoch < LR_SCHEDULE[0][0] or epoch > LR_SCHEDULE[-1][0]:

return lr

for i in range(len(LR_SCHEDULE)):

if epoch == LR_SCHEDULE[i][0]:

return LR_SCHEDULE[i][1]

return lr

Define the loss function Next, we would be defining a custom loss function to be used in the model. Take a look at this blog post to understand more about the

loss function used in YOLO .

I understood the loss function but didn’t implement it on my own. I took the implementation as it is from the above

blog post .

The original blog post was using Tensorflow 1.x so I had to update some of the code to make it run it on Tensorflow 2.x.

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

LAMBDA_NO_OBJECT = 1.0

LAMBDA_OBJECT = 5.0

LAMBDA_COORD = 1.0

LAMBDA_CLASS = 1.0

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

def get_cell_grid(GRID_W,GRID_H,BATCH_SIZE,BOX):

'''

Helper function to assure that the bounding box x and y are in the grid cell scale

== output ==

for any i=0,1..,batch size - 1

output[i,5,3,:,:] = array([[3., 5.],

[3., 5.],

[3., 5.]], dtype=float32)

'''

## cell_x.shape = (1, 13, 13, 1, 1)

## cell_x[:,i,j,:] = [[[j]]]

cell_x = tf.cast(tf.reshape(tf.tile(tf.range(GRID_W), [GRID_H]), (1, GRID_H, GRID_W, 1, 1)), tf.float32)

## cell_y.shape = (1, 13, 13, 1, 1)

## cell_y[:,i,j,:] = [[[i]]]

cell_y = tf.transpose(cell_x, (0,2,1,3,4))

## cell_gird.shape = (16, 13, 13, 5, 2)

## for any n, k, i, j

## cell_grid[n, i, j, anchor, k] = j when k = 0

## for any n, k, i, j

## cell_grid[n, i, j, anchor, k] = i when k = 1

cell_grid = tf.tile(tf.concat([cell_x,cell_y], -1), [BATCH_SIZE, 1, 1, BOX, 1])

return(cell_grid)

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

def adjust_scale_prediction(y_pred, cell_grid, ANCHORS):

"""

Adjust prediction

== input ==

y_pred : takes any real values

tensor of shape = (N batch, NGrid h, NGrid w, NAnchor, 4 + 1 + N class)

ANCHORS : list containing width and height specializaiton of anchor box

== output ==

pred_box_xy : shape = (N batch, N grid x, N grid y, N anchor, 2), contianing [center_y, center_x] rangining [0,0]x[grid_H-1,grid_W-1]

pred_box_xy[irow,igrid_h,igrid_w,ianchor,0] = center_x

pred_box_xy[irow,igrid_h,igrid_w,ianchor,1] = center_1

calculation process:

tf.sigmoid(y_pred[...,:2]) : takes values between 0 and 1

tf.sigmoid(y_pred[...,:2]) + cell_grid : takes values between 0 and grid_W - 1 for x coordinate

takes values between 0 and grid_H - 1 for y coordinate

pred_Box_wh : shape = (N batch, N grid h, N grid w, N anchor, 2), containing width and height, rangining [0,0]x[grid_H-1,grid_W-1]

pred_box_conf : shape = (N batch, N grid h, N grid w, N anchor, 1), containing confidence to range between 0 and 1

pred_box_class : shape = (N batch, N grid h, N grid w, N anchor, N class), containing

"""

BOX = int(len(ANCHORS)/2)

## cell_grid is of the shape of

### adjust x and y

# the bounding box bx and by are rescaled to range between 0 and 1 for given gird.

# Since there are BOX x BOX grids, we rescale each bx and by to range between 0 to BOX + 1

pred_box_xy = tf.sigmoid(y_pred[..., :2]) + cell_grid # bx, by

### adjust w and h

# exp to make width and height positive

# rescale each grid to make some anchor "good" at representing certain shape of bounding box

pred_box_wh = tf.exp(y_pred[..., 2:4]) * np.reshape(ANCHORS,[1,1,1,BOX,2]) # bw, bh

### adjust confidence

pred_box_conf = tf.sigmoid(y_pred[..., 4])# prob bb

### adjust class probabilities

pred_box_class = y_pred[..., 5:] # prC1, prC2, ..., prC20

return(pred_box_xy,pred_box_wh,pred_box_conf,pred_box_class)

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

def print_min_max(vec,title):

try:

print("{} MIN={:5.2f}, MAX={:5.2f}".format(

title,np.min(vec),np.max(vec)))

except ValueError: #raised if `y` is empty.

pass

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

print("*"*30)

print("prepare inputs")

GRID_W = 13

GRID_H = 13

BOX = int(len(ANCHORS)/2)

CLASS = len(LABELS)

size = BATCH_SIZE*GRID_W*GRID_H*BOX*(4 + 1 + CLASS)

y_pred = np.random.normal(size=size,scale = 10/(GRID_H*GRID_W))

y_pred = y_pred.reshape(BATCH_SIZE,GRID_H,GRID_W,BOX,4 + 1 + CLASS)

print("y_pred before scaling = {}".format(y_pred.shape))

print("*"*30)

print("define tensor graph")

y_pred_tf = tf.constant(y_pred,dtype="float32")

cell_grid = get_cell_grid(GRID_W,GRID_H,BATCH_SIZE,BOX)

(pred_box_xy, pred_box_wh, pred_box_conf, pred_box_class) = adjust_scale_prediction(y_pred_tf,

cell_grid,

ANCHORS)

print("*"*30 + "\nouput\n" + "*"*30)

print("\npred_box_xy {}".format(pred_box_xy.shape))

for igrid_w in range(pred_box_xy.shape[2]):

print_min_max(pred_box_xy[:,:,igrid_w,:,0],

" bounding box x at iGRID_W={:02.0f}".format(igrid_w))

for igrid_h in range(pred_box_xy.shape[1]):

print_min_max(pred_box_xy[:,igrid_h,:,:,1],

" bounding box y at iGRID_H={:02.0f}".format(igrid_h))

print("\npred_box_wh {}".format(pred_box_wh.shape))

print_min_max(pred_box_wh[:,:,:,:,0]," bounding box width ")

print_min_max(pred_box_wh[:,:,:,:,1]," bounding box height")

print("\npred_box_conf {}".format(pred_box_conf.shape))

print_min_max(pred_box_conf," confidence ")

print("\npred_box_class {}".format(pred_box_class.shape))

print_min_max(pred_box_class," class probability")

******************************

prepare inputs

y_pred before scaling = (16, 13 , 13 , 4 , 25 )

******************************

define tensor graph

******************************

ouput

******************************

pred_box_xy (16, 13 , 13 , 4 , 2 )

bounding box x at iGRID_W=00 MIN= 0.45 , MAX= 0.55

bounding box x at iGRID_W=01 MIN= 1.45 , MAX= 1.54

bounding box x at iGRID_W=02 MIN= 2.45 , MAX= 2.55

bounding box x at iGRID_W=03 MIN= 3.45 , MAX= 3.55

bounding box x at iGRID_W=04 MIN= 4.45 , MAX= 4.55

bounding box x at iGRID_W=05 MIN= 5.45 , MAX= 5.55

bounding box x at iGRID_W=06 MIN= 6.46 , MAX= 6.55

bounding box x at iGRID_W=07 MIN= 7.45 , MAX= 7.55

bounding box x at iGRID_W=08 MIN= 8.46 , MAX= 8.55

bounding box x at iGRID_W=09 MIN= 9.44 , MAX= 9.55

bounding box x at iGRID_W=10 MIN=10.46, MAX=10.55

bounding box x at iGRID_W=11 MIN=11.46, MAX=11.55

bounding box x at iGRID_W=12 MIN=12.45, MAX=12.55

bounding box y at iGRID_H=00 MIN= 0.45 , MAX= 0.55

bounding box y at iGRID_H=01 MIN= 1.45 , MAX= 1.54

bounding box y at iGRID_H=02 MIN= 2.46 , MAX= 2.54

bounding box y at iGRID_H=03 MIN= 3.45 , MAX= 3.55

bounding box y at iGRID_H=04 MIN= 4.45 , MAX= 4.54

bounding box y at iGRID_H=05 MIN= 5.45 , MAX= 5.54

bounding box y at iGRID_H=06 MIN= 6.45 , MAX= 6.55

bounding box y at iGRID_H=07 MIN= 7.45 , MAX= 7.55

bounding box y at iGRID_H=08 MIN= 8.46 , MAX= 8.54

bounding box y at iGRID_H=09 MIN= 9.46 , MAX= 9.55

bounding box y at iGRID_H=10 MIN=10.45, MAX=10.54

bounding box y at iGRID_H=11 MIN=11.46, MAX=11.54

bounding box y at iGRID_H=12 MIN=12.45, MAX=12.54

pred_box_wh (16, 13 , 13 , 4 , 2 )

bounding box width MIN= 0.88 , MAX=12.49

bounding box height MIN= 1.46 , MAX=12.64

pred_box_conf (16, 13 , 13 , 4 )

confidence MIN= 0.45 , MAX= 0.56

pred_box_class (16, 13 , 13 , 4 , 20 )

class probability MIN=-0.26, MAX= 0.28

We extract the ground truth.

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

def extract_ground_truth(y_true):

true_box_xy = y_true[..., 0:2] # bounding box x, y coordinate in grid cell scale

true_box_wh = y_true[..., 2:4] # number of cells accross, horizontally and vertically

true_box_conf = y_true[...,4] # confidence

true_box_class = tf.argmax(y_true[..., 5:], -1)

return(true_box_xy, true_box_wh, true_box_conf, true_box_class)

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

# y_batch is the output of the simpleBatchGenerator.fit()

print("Input y_batch = {}".format(y_batch.shape))

y_batch_tf = tf.constant(y_batch,dtype="float32")

(true_box_xy, true_box_wh,

true_box_conf, true_box_class) = extract_ground_truth(y_batch_tf)

print("*"*30 + "\nouput\n" + "*"*30)

print("\ntrue_box_xy {}".format(true_box_xy.shape))

for igrid_w in range(true_box_xy.shape[2]):

vec = true_box_xy[:,:,igrid_w,:,0]

pick = true_box_conf[:,:,igrid_w,:] == 1 ## only pick C_ij = 1

print_min_max(vec[pick]," bounding box x at iGRID_W={:02.0f}".format(igrid_w))

for igrid_h in range(true_box_xy.shape[1]):

vec = true_box_xy[:,igrid_h,:,:,1]

pick = true_box_conf[:,igrid_h,:,:] == 1 ## only pick C_ij = 1

print_min_max(vec[pick]," bounding box y at iGRID_H={:02.0f}".format(igrid_h))

print("\ntrue_box_wh {}".format(true_box_wh.shape))

print_min_max(true_box_wh[:,:,:,:,0]," bounding box width ")

print_min_max(true_box_wh[:,:,:,:,1]," bounding box height")

print("\ntrue_box_conf {}".format(true_box_conf.shape))

print(" confidence, unique value = {}".format(np.unique(true_box_conf)))

print("\ntrue_box_class {}".format(true_box_class.shape))

print(" class index, unique value = {}".format(np.unique(true_box_class)) )

Input y_batch = (16, 13 , 13 , 4 , 25 )

******************************

ouput

******************************

true_box_xy (16, 13 , 13 , 4 , 2 )

bounding box x at iGRID_W=01 MIN= 1.56 , MAX= 1.56

bounding box x at iGRID_W=02 MIN= 2.36 , MAX= 2.36

bounding box x at iGRID_W=03 MIN= 3.09 , MAX= 3.41

bounding box x at iGRID_W=05 MIN= 5.00 , MAX= 5.94

bounding box x at iGRID_W=06 MIN= 6.22 , MAX= 6.67

bounding box x at iGRID_W=07 MIN= 7.66 , MAX= 7.66

bounding box x at iGRID_W=08 MIN= 8.56 , MAX= 8.86

bounding box x at iGRID_W=09 MIN= 9.09 , MAX= 9.39

bounding box y at iGRID_H=01 MIN= 1.58 , MAX= 1.58

bounding box y at iGRID_H=05 MIN= 5.34 , MAX= 5.42

bounding box y at iGRID_H=06 MIN= 6.50 , MAX= 6.91

bounding box y at iGRID_H=07 MIN= 7.02 , MAX= 7.38

bounding box y at iGRID_H=08 MIN= 8.08 , MAX= 8.64

bounding box y at iGRID_H=09 MIN= 9.20 , MAX= 9.88

bounding box y at iGRID_H=10 MIN=10.14, MAX=10.36

bounding box y at iGRID_H=11 MIN=11.11, MAX=11.42

true_box_wh (16, 13 , 13 , 4 , 2 )

bounding box width MIN= 0.00 , MAX=12.97

bounding box height MIN= 0.00 , MAX=13.00

true_box_conf (16, 13 , 13 , 4 )

confidence, unique value = [0. 1 .]

true_box_class (16, 13 , 13 , 4 )

class index, unique value = [ 0 2 6 7 8 11 14 15 17 19 ]

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

def calc_loss_xywh(true_box_conf, COORD_SCALE, true_box_xy, pred_box_xy, true_box_wh, pred_box_wh):

coord_mask = tf.expand_dims(true_box_conf, axis=-1) * LAMBDA_COORD

nb_coord_box = tf.reduce_sum(tf.cast(coord_mask > 0.0, tf.float32))

loss_xy = tf.reduce_sum(tf.square(true_box_xy-pred_box_xy) * coord_mask) / (nb_coord_box + 1e-6) / 2.

loss_wh = tf.reduce_sum(tf.square(true_box_wh-pred_box_wh) * coord_mask) / (nb_coord_box + 1e-6) / 2.

return (loss_xy + loss_wh, coord_mask)

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

LAMBDA_COORD = 1

loss_xywh, coord_mask = calc_loss_xywh(true_box_conf, LAMBDA_COORD, true_box_xy, pred_box_xy,true_box_wh, pred_box_wh)

print("*"*30 + "\nouput\n" + "*"*30)

print("loss_xywh = {:4.3f}".format(loss_xywh))

***** ***** ***** ***** ***** *****

ouput

***** ***** ***** ***** ***** *****

loss_xywh = 4.148

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

def calc_loss_class(true_box_conf,CLASS_SCALE, true_box_class,pred_box_class):

'''

== input ==

true_box_conf : tensor of shape (N batch, N grid h, N grid w, N anchor)

true_box_class : tensor of shape (N batch, N grid h, N grid w, N anchor), containing class index

pred_box_class : tensor of shape (N batch, N grid h, N grid w, N anchor, N class)

CLASS_SCALE : 1.0

== output ==

class_mask

if object exists in this (grid_cell, anchor) pair and the class object receive nonzero weight

class_mask[iframe,igridy,igridx,ianchor] = 1

else:

0

'''

class_mask = true_box_conf * CLASS_SCALE ## L_{i,j}^obj * lambda_class

nb_class_box = tf.reduce_sum(tf.cast(class_mask > 0.0, tf.float32))

loss_class = tf.nn.sparse_softmax_cross_entropy_with_logits(labels = true_box_class,

logits = pred_box_class)

loss_class = tf.reduce_sum(loss_class * class_mask) / (nb_class_box + 1e-6)

return(loss_class)

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

LAMBDA_CLASS = 1

loss_class = calc_loss_class(true_box_conf,LAMBDA_CLASS,

true_box_class,pred_box_class)

print("*"*30 + "\nouput\n" + "*"*30)

print("loss_class = {:4.3f}".format(loss_class))

***** ***** ***** ***** ***** *****

ouput

***** ***** ***** ***** ***** *****

loss_class = 3.018

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

def get_intersect_area(true_xy,true_wh,

pred_xy,pred_wh):

'''

== INPUT ==

true_xy,pred_xy, true_wh and pred_wh must have the same shape length

p1 : pred_mins = (px1,py1)

p2 : pred_maxs = (px2,py2)

t1 : true_mins = (tx1,ty1)

t2 : true_maxs = (tx2,ty2)

p1______________________

| t1___________ |

| | | |

|_______|___________|__|p2

| |rmax

|___________|

t2

intersect_mins : rmin = t1 = (tx1,ty1)

intersect_maxs : rmax = (rmaxx,rmaxy)

intersect_wh : (rmaxx - tx1, rmaxy - ty1)

'''

true_wh_half = true_wh / 2.

true_mins = true_xy - true_wh_half

true_maxes = true_xy + true_wh_half

pred_wh_half = pred_wh / 2.

pred_mins = pred_xy - pred_wh_half

pred_maxes = pred_xy + pred_wh_half

intersect_mins = tf.maximum(pred_mins, true_mins)

intersect_maxes = tf.minimum(pred_maxes, true_maxes)

intersect_wh = tf.maximum(intersect_maxes - intersect_mins, 0.)

intersect_areas = intersect_wh[..., 0] * intersect_wh[..., 1]

true_areas = true_wh[..., 0] * true_wh[..., 1]

pred_areas = pred_wh[..., 0] * pred_wh[..., 1]

union_areas = pred_areas + true_areas - intersect_areas

iou_scores = tf.truediv(intersect_areas, union_areas)

return(iou_scores)

def calc_IOU_pred_true_assigned(true_box_conf,

true_box_xy, true_box_wh,

pred_box_xy, pred_box_wh):

'''

== input ==

true_box_conf : tensor of shape (N batch, N grid h, N grid w, N anchor )

true_box_xy : tensor of shape (N batch, N grid h, N grid w, N anchor , 2)

true_box_wh : tensor of shape (N batch, N grid h, N grid w, N anchor , 2)

pred_box_xy : tensor of shape (N batch, N grid h, N grid w, N anchor , 2)

pred_box_wh : tensor of shape (N batch, N grid h, N grid w, N anchor , 2)

== output ==

true_box_conf : tensor of shape (N batch, N grid h, N grid w, N anchor)

true_box_conf value depends on the predicted values

true_box_conf = IOU_{true,pred} if objecte exist in this anchor else 0

'''

iou_scores = get_intersect_area(true_box_xy,true_box_wh,

pred_box_xy,pred_box_wh)

true_box_conf_IOU = iou_scores * true_box_conf

return(true_box_conf_IOU)

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

true_box_conf_IOU = calc_IOU_pred_true_assigned(

true_box_conf,

true_box_xy, true_box_wh,

pred_box_xy, pred_box_wh)

print("*"*30 + "\ninput\n" + "*"*30)

print("true_box_conf = {}".format(true_box_conf))

print("true_box_xy = {}".format(true_box_xy))

print("true_box_wh = {}".format(true_box_wh))

print("pred_box_xy = {}".format(pred_box_xy))

print("pred_box_wh = {}".format(pred_box_wh))

print("*"*30 + "\nouput\n" + "*"*30)

print("true_box_conf_IOU.shape = {}".format(true_box_conf_IOU.shape))

vec = true_box_conf_IOU

pick = vec!=0

vec = vec[pick]

plt.hist(vec)

plt.title("Histogram\nN (%) nonzero true_box_conf_IOU = {} ({:5.2f}%)".format(np.sum(pick),

100*np.mean(pick)))

plt.xlabel("nonzero true_box_conf_IOU")

plt.show()

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

def calc_IOU_pred_true_best(pred_box_xy,pred_box_wh,true_boxes):

'''

== input ==

pred_box_xy : tensor of shape (N batch, N grid h, N grid w, N anchor, 2)

pred_box_wh : tensor of shape (N batch, N grid h, N grid w, N anchor, 2)

true_boxes : tensor of shape (N batch, N grid h, N grid w, N anchor, 2)

== output ==

best_ious

for each iframe,

best_ious[iframe,igridy,igridx,ianchor] contains

the IOU of the object that is most likely included (or best fitted)

within the bounded box recorded in (grid_cell, anchor) pair

NOTE: a same object may be contained in multiple (grid_cell, anchor) pair

from best_ious, you cannot tell how may actual objects are captured as the "best" object

'''

true_xy = true_boxes[..., 0:2] # (N batch, 1, 1, 1, TRUE_BOX_BUFFER, 2)

true_wh = true_boxes[..., 2:4] # (N batch, 1, 1, 1, TRUE_BOX_BUFFER, 2)

pred_xy = tf.expand_dims(pred_box_xy, 4) # (N batch, N grid_h, N grid_w, N anchor, 1, 2)

pred_wh = tf.expand_dims(pred_box_wh, 4) # (N batch, N grid_h, N grid_w, N anchor, 1, 2)

iou_scores = get_intersect_area(true_xy,

true_wh,

pred_xy,